From Emergent Behavior to Governable Systems

Closing the Gap Between Simulation Power and Operational Trust

Modern engineering organizations can simulate almost anything.

Aerospace systems, defense platforms, energy infrastructure, and advanced manufacturing all rely on large-scale simulations that are high-fidelity, high-dimensional, and visually impressive. Yet despite this power, a familiar problem keeps surfacing:

The simulation works — until it matters.

Behavior looks correct under nominal conditions, but confidence degrades near boundaries, during handoffs, after modifications, or under external scrutiny. Results become harder to explain, harder to defend, and harder to trust over time.

This isn't a tooling problem. It's a system closure problem.

⸻

The Hidden Failure Mode of Complex Systems

The pattern is usually the same:

- The system exhibits emergent behavior that cannot be reasoned about directly.

- Control or tuning is applied reactively rather than structurally.

- Validation focuses on outputs, not failure envelopes.

- Governance is informal or implicit.

- Over time, meaning drifts — even when performance appears unchanged.

The result is silent failure: the system appears to behave correctly while gradually leaving its intended regime.

What's missing is not more simulation capability, but a way to take responsibility for the system's meaning.

⸻

What We Did Differently

We set out to answer a simple but uncomfortable question:

What would it take to turn a powerful simulation into something an organization can rely on without surprises?

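To find out, we deliberately chose a system with the properties that make real-world systems hard to trust:

- High dimensionality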

- Partial observability

- Emergent regimes

- Safety-critical boundaries

- Long operational lifetimes

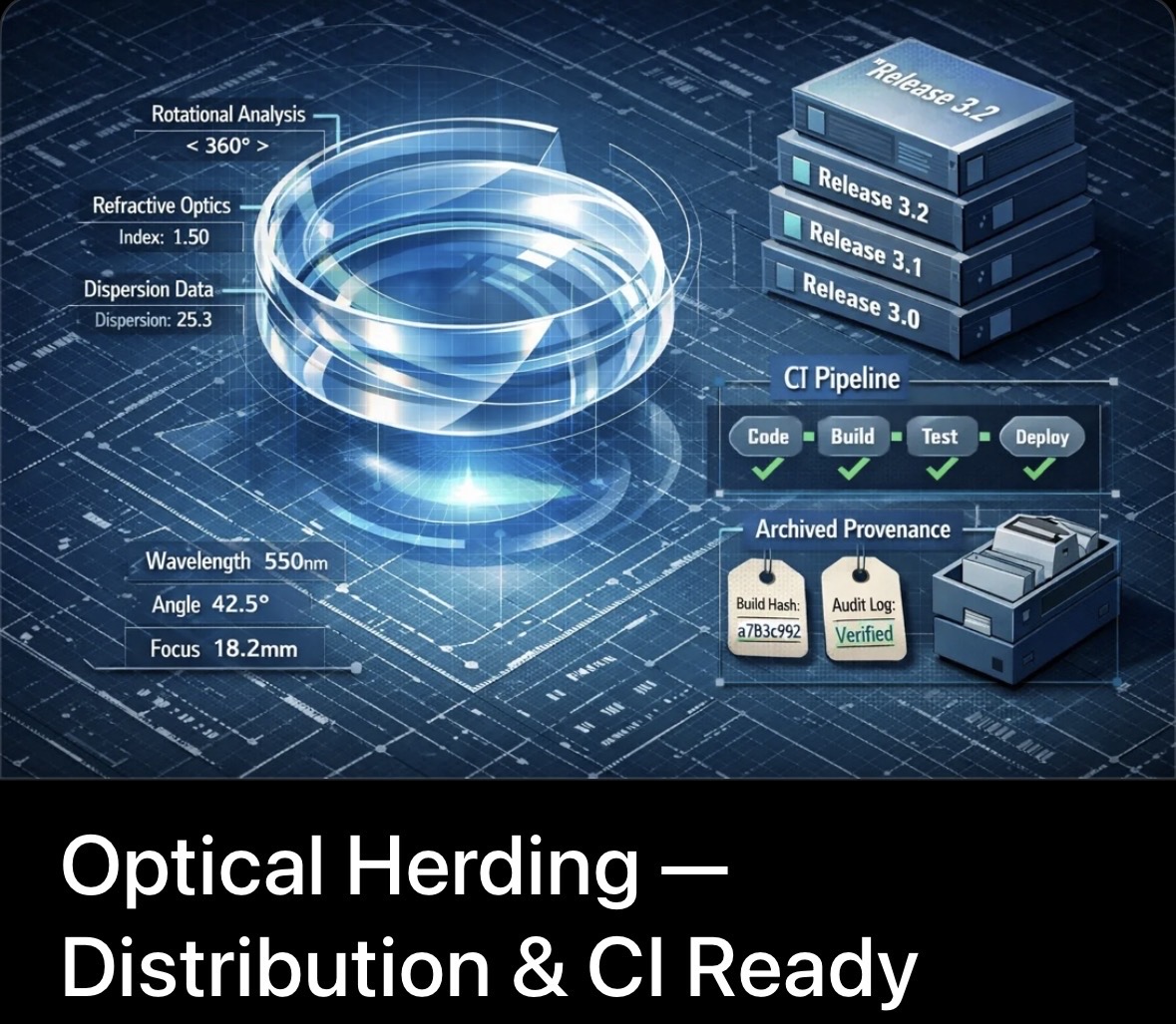

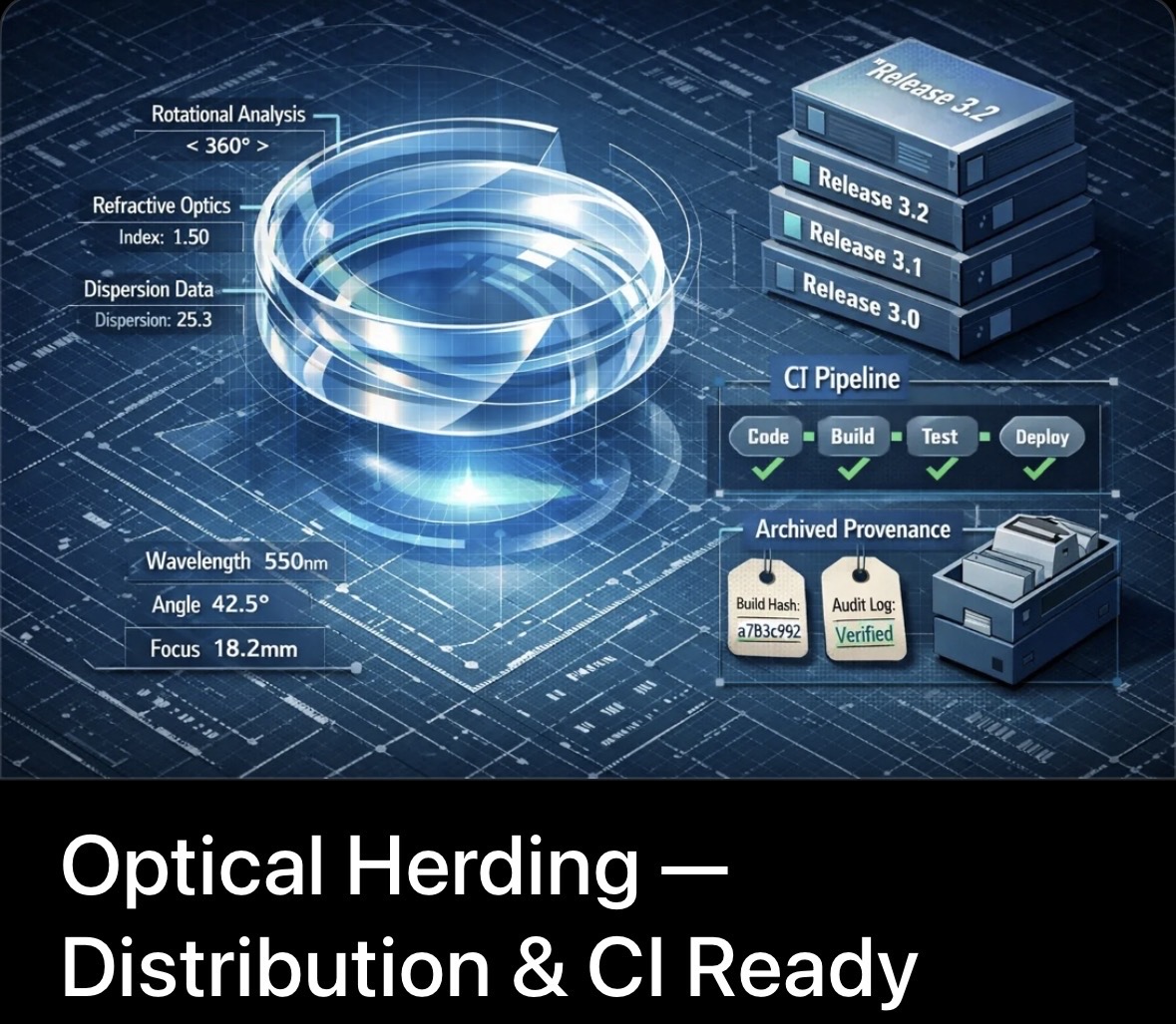

The specific system was a 3D rotational refractive geometry that produces optical herding — collective organization of ray ensembles driven purely by geometry. No learning. No stochastic control. No ray–ray interaction.

What mattered was not the phenomenon itself, but the closure process applied to it.

⸻

Step 1: Prove the System Is Controllable (Not Fragile)

Emergent behavior is often dismissed as delicate or uncontrollable.

We tested that assumption by closing a feedback loop around the geometry:

- Metrics summarizing system behavior were measured in real time

- Geometry was adjusted through bounded, interpretable control signals

- Strict timescale separation prevented numerical or control artifacts

The result was a system that could stabilize, recover from disturbance, suppress unsafe regimes, and behave predictably under feedback — all without micromanaging individual trajectories.

This matters because controllability is the gate. If a system cannot be safely regulated, nothing else downstream is meaningful.
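A minimal sketch of the Step 1 loop, under loud assumptions: the plant is a toy scalar stand-in (a hypothetical order metric `m` that relaxes toward `tanh(g)` for a geometry parameter `g`), not the refractive geometry itself, and the controller is a generic clamped integral law, not necessarily the one used in the case study. `BoundedController`, `simulate`, and every constant are illustrative. It shows the three ingredients listed above: a measured summary metric, a control signal bounded in both level and rate, and control updates spaced several plant relaxation times apart.

```python
import math
from dataclasses import dataclass


@dataclass
class BoundedController:
    """Hypothetical slow integral controller, clamped in both level and rate."""
    target: float
    gain: float = 0.5
    max_step: float = 0.05  # largest geometry change allowed per update
    lo: float = -2.0        # hard bounds on the geometry parameter
    hi: float = 2.0

    def update(self, g: float, measured: float) -> float:
        step = self.gain * (self.target - measured)
        step = max(-self.max_step, min(self.max_step, step))  # rate limit
        return max(self.lo, min(self.hi, g + step))           # level limit


def simulate(target=0.6, steps=50_000, dt=0.01, slow_every=500,
             kick_at=20_000, offset=0.3):
    """Toy plant: m relaxes toward tanh(g) - d with time constant 1.

    slow_every * dt = 5 time constants, so the plant settles between control
    updates (timescale separation). From kick_at onward a persistent
    disturbance d = offset shifts the plant's steady state downward.
    """
    ctrl = BoundedController(target=target)
    m, g = 0.0, 0.0
    history = []
    for k in range(steps):
        d = offset if k >= kick_at else 0.0
        m += dt * (math.tanh(g) - d - m)  # fast dynamics (explicit Euler)
        if k % slow_every == 0:
            g = ctrl.update(g, m)         # slow, bounded control update
        history.append((m, g))
    return history


history = simulate()
print("before disturbance:", round(history[19_999][0], 3))
print("after recovery:    ", round(history[-1][0], 3))
```

The integral term moves `g` to a new operating point after the disturbance, while the step clamp keeps every geometry change small; no individual trajectory is ever steered directly.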

⸻

Step 2: Reduce the System So It Can Be Explained

Control alone is not enough. If you cannot explain why control works, trust is temporary.

We collapsed the full high-dimensional behavior into a small set of order parameters that captured the majority of regime variance. In practice, the system's dynamics lived on a low-dimensional manifold with identifiable attractors, separatrices, and failure paths.

This made it possible to:

- Map regimes instead of tuning parameters

- Predict instability instead of reacting to it

- Explain behavior to someone who didn't build the model

This is where many simulations stop being "impressive" and start being useful.
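One common way to find such order parameters is principal component analysis over simulation snapshots. The source does not say which reduction method was used, so treat PCA here as an assumed stand-in. The sketch keeps the fewest principal directions that together explain a chosen fraction of the variance (the 90% threshold is an arbitrary illustrative value) and checks itself on synthetic data: two hidden coordinates embedded in 200 observed features plus small noise. `order_parameters` is a hypothetical helper name.

```python
import numpy as np


def order_parameters(snapshots: np.ndarray, variance_target: float = 0.9):
    """Project snapshots (n_samples x n_features) onto the fewest principal
    directions whose cumulative explained variance reaches variance_target."""
    centered = snapshots - snapshots.mean(axis=0)
    # Rows of vt are principal directions; squared singular values are
    # proportional to the variance captured along each direction.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    k = int(np.searchsorted(np.cumsum(explained), variance_target)) + 1
    return centered @ vt[:k].T, explained[:k]


# Synthetic check: 2 latent coordinates hidden inside 200 observed features.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 200))
snapshots = latent @ mixing + 0.05 * rng.normal(size=(500, 200))

z, explained = order_parameters(snapshots)
print(z.shape, explained.round(3))
```

PCA only finds a linear subspace ordered by variance; a curved manifold may need a nonlinear method, and the retained coordinates still need a physical interpretation before they deserve the name "order parameters."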

⸻

Step 3: Certify Correctness and Failure — Explicitly

Most validation answers the question: "Does this look right?"

We instead asked: "Where does this break, and how do we know?"

We certified the system against explicit criteria for:

- Geometric invariants

- Dynamical stability conditions

- Reduced-model consistency

- Regime classification correctness

- Control safety and saturation behavior

- Backend and hardware equivalence

Crucially, every known breakdown was predicted in advance rather than discovered as an emergent surprise.

At this point, the system was no longer just working — it was bounded.
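The claim that breakdowns were predicted rather than emergent can be made checkable by comparing a reduced model's predicted failure set against failures observed in the full run. In this hypothetical sketch, the "reduced model" is a closed-form saturation condition for a clamped controller on the static toy plant `y = tanh(u)`, the "full run" is the iterated control loop, and `U_MAX` is an assumed actuator bound. Certification fails if any observed breakdown was not predicted; predictions that never materialize are reported but not fatal.

```python
import math

U_MAX = 2.0  # hypothetical actuator bound


def predicted_breakdown(demand: float) -> bool:
    """Reduced-model prediction: tanh(u) = demand has no solution with |u| <= U_MAX."""
    return abs(demand) >= math.tanh(U_MAX)


def observed_breakdown(demand: float, iters: int = 2000, gain: float = 0.5) -> bool:
    """'Full' run: clamped integral control on the static toy plant y = tanh(u)."""
    u = 0.0
    for _ in range(iters):
        u = max(-U_MAX, min(U_MAX, u + gain * (demand - math.tanh(u))))
    return abs(demand - math.tanh(u)) > 1e-3


def certify(cases):
    """Every observed breakdown must have been predicted by the reduced model."""
    report = {"predicted_and_observed": [], "unpredicted": [], "predicted_only": []}
    for c in cases:
        p, o = predicted_breakdown(c), observed_breakdown(c)
        if p and o:
            report["predicted_and_observed"].append(c)
        elif o:
            report["unpredicted"].append(c)  # an emergent surprise
        elif p:
            report["predicted_only"].append(c)
    report["certified"] = not report["unpredicted"]
    return report


report = certify([-0.99, -0.5, 0.0, 0.5, 0.9, 0.99])
print(report)
```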

⸻

Step 4: Lock Meaning Over Time

Certified systems still decay.

People change. Toolchains change. Hardware changes. Meaning drifts unless it is actively defended.

So we built that defense into the process:

- Explicit change-control rules for behavioral vs non-behavioral modifications

- Immutable, versioned release artifacts with provenance

- Continuous integration that enforces regime-level invariants, not just builds

- Ongoing validation to detect drift before release

The system now protects its own validity. Correctness is not assumed — it is enforced.
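The governance rules above can be sketched as two small pieces, both hypothetical: a release manifest that pins artifact content by SHA-256 alongside the regime-level metrics the release was certified with, and a CI gate that compares a candidate build's regime metrics to that baseline. The metric names (`herding_index`, `transition_point`), the tolerances, and the three outcomes (`pass`, `recertify`, `fail`) are illustrative assumptions, not the case study's actual rules.

```python
import hashlib
import json


def release_manifest(version: str, artifacts: dict[str, bytes],
                     regime_metrics: dict[str, float]) -> dict:
    """Versioned record pinning artifact content and certified regime metrics."""
    manifest = {
        "version": version,
        "sha256": {name: hashlib.sha256(data).hexdigest()
                   for name, data in sorted(artifacts.items())},
        "regime_metrics": dict(sorted(regime_metrics.items())),
    }
    # Fingerprint of the manifest itself; recomputing it exposes later edits.
    canonical = json.dumps(manifest, sort_keys=True).encode()
    manifest["fingerprint"] = hashlib.sha256(canonical).hexdigest()
    return manifest


def regime_gate(baseline: dict[str, float], candidate: dict[str, float],
                tolerances: dict[str, float], change_kind: str) -> str:
    """CI decision based on regime-level metrics rather than build success."""
    moved = [k for k, tol in tolerances.items()
             if abs(candidate[k] - baseline[k]) > tol]
    if not moved:
        return "pass"
    if change_kind == "behavioral":
        return "recertify"  # declared behavioral change: route back to certification
    return "fail"           # undeclared drift: block the release


# Hypothetical metric names and tolerances.
base = release_manifest("1.0.0", {"model.bin": b"abc"},
                        {"herding_index": 0.60, "transition_point": 1.47})
tol = {"herding_index": 0.005, "transition_point": 0.01}
print(regime_gate(base["regime_metrics"],
                  {"herding_index": 0.601, "transition_point": 1.47},
                  tol, "non-behavioral"))
```

The design choice is that a change's declared kind decides what happens when regime metrics move: a non-behavioral change that shifts behavior is treated as drift, not as a routine update.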

⸻

Why This Matters Beyond Optics

Nothing about this methodology is specific to light rays. The same structure appears across industries:

- **Aerospace** — aerodynamic regimes, stability envelopes, flight control boundaries

- **Defense** — sensing pipelines, autonomy stacks, engagement models

- **Energy** — thermal systems, grid dynamics, reactor simulations

- **Advanced manufacturing** — multiphysics processes with tight tolerances

Wherever a system combines:

- complex simulations

- partial observability

- emergent behavior

- long lifecycles

the limiting factor is not fidelity. It is closure.

⸻

How This Applies in Practice

In real engagements, this work looks like:

1. Entering an existing model or simulation

2. Identifying reduced explanatory structure

3. Mapping regimes, limits, and failure modes

4. Establishing acceptance and no-go criteria

5. Locking governance so meaning survives handoff and change
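Acceptance and no-go criteria are easiest to hand over when they are written as data rather than prose. A hypothetical sketch: an envelope over reduced order parameters with an acceptance box and no-go ranges, plus a three-way classification in which anything that falls in neither region goes to human review. The `Envelope` class, the parameter names (`coherence`, `spread`), and all bounds are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Hypothetical acceptance / no-go criteria over reduced order parameters."""
    accept: dict[str, tuple[float, float]]  # inside ALL ranges -> accept
    no_go: dict[str, tuple[float, float]]   # inside ANY range -> no-go

    def classify(self, point: dict[str, float]) -> str:
        if any(lo <= point[k] <= hi for k, (lo, hi) in self.no_go.items()):
            return "no-go"
        if all(lo <= point[k] <= hi for k, (lo, hi) in self.accept.items()):
            return "accept"
        return "review"  # outside both regions: not certified either way


# Invented criteria over two order parameters.
env = Envelope(
    accept={"coherence": (0.4, 0.8), "spread": (0.0, 0.2)},
    no_go={"spread": (0.5, float("inf"))},
)
for p in [{"coherence": 0.6, "spread": 0.1},
          {"coherence": 0.9, "spread": 0.1},
          {"coherence": 0.6, "spread": 0.7}]:
    print(p, env.classify(p))
```

No-go is checked first, so a point can never be accepted while it sits inside a declared failure region.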

Organizations often have pieces of this capability spread across teams. They rarely have it end-to-end.

That gap is where systems fail — and where this approach applies.

⸻

A Complete Case Study

The full end-to-end closure of the optical herding system — from emergent behavior through control, theory, certification, governance, and automated stewardship — is documented in detail here:

System Closure Case Study: Governable Optical Herding [https://zenodo.org/records/18269153](https://zenodo.org/records/18269153)

This case study is not presented as a novelty demonstration, but as a concrete example of how complex systems can be made reliable, explainable, and durable over time.

⸻

Closing Thought

Complex systems don't fail because they are too complex.

They fail because no one takes responsibility for their meaning once they exist.

Closing that gap — between simulation power and operational trust — is the work.